The DevOps-to-MLOps Transition: The Next Evolution of AI Teams

The ascent of Artificial Intelligence (AI) from research labs to mainstream business applications is transforming how we build, deploy, and manage software. This evolution necessitates a parallel transformation in our engineering practices, particularly within the realm of DevOps. As companies globally embrace AI, the demand for engineers who can bridge the gap between infrastructure management and intelligent systems is rapidly growing.

At eDev, we recognize this critical intersection. Organizations starting AI projects increasingly find that traditional DevOps practices must evolve to accommodate the unique demands of Machine Learning (ML) workflows. This post explores the role of DevOps engineers and the emerging role of MLOps engineers in AI projects, and offers practical guidance on how companies can build teams to navigate this technological frontier.

The Foundational Role of DevOps Engineers

DevOps engineers are the architects and custodians of the software development and deployment lifecycle. They champion automation, collaboration, and continuous improvement to ensure the efficient and reliable delivery of software applications. In the context of AI projects, their responsibilities remain foundational and encompass:

- Infrastructure Provisioning and Management: DevOps engineers are responsible for setting up and maintaining the underlying infrastructure required to develop, train, and deploy AI models. This includes managing cloud resources, virtual machines, containers and container orchestration (for example, Docker and Kubernetes), and network configuration. The scalability and reliability of this infrastructure are paramount for computationally intensive AI tasks such as model training.

- Continuous Integration and Continuous Delivery (CI/CD): Implementing robust CI/CD pipelines is crucial for automating the build, test, and deployment processes for AI applications. DevOps engineers design and manage these pipelines, ensuring that code changes are seamlessly integrated and new versions of AI models and applications can be deployed rapidly and reliably.

- Monitoring and Logging: DevOps engineers establish comprehensive monitoring and logging systems to track the performance and health of AI applications and infrastructure. This includes setting up alerts, analyzing metrics, and troubleshooting issues to ensure the stability and availability of AI-powered services.

- Security and Compliance: Integrating security practices throughout the AI development lifecycle is a key responsibility of DevOps engineers. They implement security measures, manage access controls, and ensure compliance with relevant regulations to protect sensitive data and AI systems.
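For AI applications, a CI/CD pipeline usually needs a check that classic software pipelines lack: confirming that a newly trained model is not worse than the one already in production. The following is a minimal sketch of such a quality gate. It assumes an earlier pipeline step has written evaluation metrics for the candidate and the production baseline to JSON files with `accuracy` and `p95_latency_ms` keys; those file formats, the `evaluate_gate` function, and the thresholds are hypothetical and chosen only for illustration.

```python
"""Sketch of a CI quality gate that blocks a model release on regression."""
import json
import sys
from dataclasses import dataclass


@dataclass(frozen=True)
class GateConfig:
    # Illustrative thresholds (assumptions); real values depend on the model and its SLA.
    max_accuracy_drop: float = 0.01  # absolute drop allowed vs. the production baseline
    max_p95_latency_ms: float = 200.0


def evaluate_gate(candidate: dict, baseline: dict, cfg: GateConfig) -> list[str]:
    """Return human-readable failures; an empty list means the gate passes."""
    failures = []
    drop = baseline["accuracy"] - candidate["accuracy"]
    if drop > cfg.max_accuracy_drop:
        failures.append(f"accuracy dropped by {drop:.3f} (allowed {cfg.max_accuracy_drop:.3f})")
    if candidate["p95_latency_ms"] > cfg.max_p95_latency_ms:
        failures.append(
            f"p95 latency {candidate['p95_latency_ms']:.0f} ms exceeds {cfg.max_p95_latency_ms:.0f} ms"
        )
    return failures


def main(candidate_path: str, baseline_path: str) -> int:
    # Both files are assumed to be JSON written by an earlier evaluation step.
    with open(candidate_path) as f:
        candidate = json.load(f)
    with open(baseline_path) as f:
        baseline = json.load(f)
    failures = evaluate_gate(candidate, baseline, GateConfig())
    for msg in failures:
        print(f"GATE FAILED: {msg}", file=sys.stderr)
    # Most CI systems mark a step as failed when it exits with a non-zero code.
    return 1 if failures else 0


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

In a pipeline, this could run as a step such as `python gate.py candidate_metrics.json baseline_metrics.json` (the script and file names are placeholders). Because a regression produces a non-zero exit code, the deployment stage never starts for a worse model.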

The Emergence of MLOps Engineers

While DevOps provides a strong foundation, AI projects introduce a new layer of complexity that necessitates specialized skills and practices. This is where MLOps (Machine Learning Operations) comes into play. MLOps engineers are focused on streamlining the entire lifecycle of machine learning models, from experimentation and development to deployment, monitoring, and retraining in production. Their key responsibilities include:

- Model Deployment and Serving: MLOps engineers are responsible for deploying trained AI models into production environments, ensuring they are scalable, reliable, and accessible to other applications. This often involves containerization, API development, and the use of specialized model serving frameworks.

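- Model Monitoring and Performance Management: Unlike traditional software, an AI model's performance can degrade over time even when its code does not change. Common causes are data drift (the distribution of incoming data shifts away from the data the model was trained on) and concept drift (the relationship between the inputs and the target changes). MLOps engineers implement monitoring systems to track key model metrics (e.g., accuracy, latency, bias) and trigger alerts when performance falls below acceptable thresholds.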

- Automated Model Retraining and Updating: MLOps engineers design and automate the process of retraining and updating AI models with new data. This ensures that models remain accurate and relevant over time, a critical aspect of maintaining the effectiveness of AI applications.

- Feature Engineering and Data Pipeline Automation: Collaborating with data scientists, MLOps engineers help build and automate the data pipelines required for feature engineering, ensuring a consistent and reliable flow of data for model training and inference.

- Reproducibility and Version Control for Models: MLOps engineers implement systems for tracking and versioning AI models, datasets, and experimental parameters to ensure reproducibility and facilitate collaboration across data science and engineering teams.
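Drift monitoring and automated retraining often meet in a simple loop: compare recent production inputs with the training baseline, and flag the model for retraining when the shift is large. The sketch below uses the Population Stability Index (PSI), computed as the sum over bins of (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the fractions of baseline and recent values in bin i. PSI is one common drift metric among several; the equal-width binning, the 0.25 threshold (a widely used rule of thumb, not a universal standard), and the `should_retrain` helper are assumptions for illustration. The code only decides whether to retrain; the retraining job itself is out of scope.

```python
"""Sketch: flag features for retraining when their distribution drifts (PSI)."""
import bisect
import math
import random
from typing import Sequence


def _bin_fractions(values: Sequence[float], edges: list[float]) -> list[float]:
    """Fraction of values in each bin; out-of-range values go to the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        counts[min(max(idx, 0), n_bins - 1)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI = sum((a_i - e_i) * ln(a_i / e_i)) over equal-width bins of the baseline."""
    lo, hi = min(expected), max(expected)
    if hi == lo:  # constant baseline: widen the range to avoid zero-width bins
        hi = lo + 1.0
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]
    e_frac = _bin_fractions(expected, edges)
    a_frac = _bin_fractions(actual, edges)
    psi = 0.0
    for e, a in zip(e_frac, a_frac):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def should_retrain(psi_by_feature: dict[str, float], threshold: float = 0.25) -> list[str]:
    """Return the (sorted) names of features whose PSI exceeds the threshold."""
    return sorted(name for name, psi in psi_by_feature.items() if psi > threshold)


if __name__ == "__main__":
    # Synthetic data: feature_b's mean shifts by one standard deviation.
    rng = random.Random(0)
    baseline = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    recent_a = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    recent_b = [rng.gauss(1.0, 1.0) for _ in range(5000)]
    scores = {
        "feature_a": population_stability_index(baseline, recent_a),
        "feature_b": population_stability_index(baseline, recent_b),
    }
    drifted = should_retrain(scores)
    if drifted:
        print(f"Drift detected in {drifted}; trigger the retraining pipeline.")
```

Computing PSI per feature keeps alerts actionable: the monitoring system can report which inputs moved, which helps data scientists decide whether fresh data alone is enough or the features themselves need rework.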

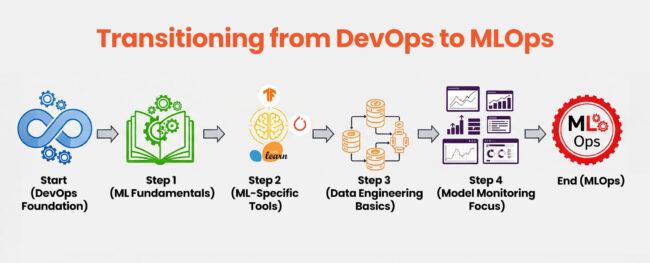

Can DevOps Engineers Evolve into MLOps Engineers for AI Projects?

The answer is a resounding yes, given the right mindset, training, and exposure. DevOps engineers already have a strong foundation in infrastructure management, automation, and CI/CD principles, all of which are highly valuable in the MLOps domain. As noted in a McKinsey report, MLOps builds on DevOps engineering concepts to address AI’s unique characteristics, which makes the transition a natural extension of a DevOps engineer’s existing skill set. IBM’s article “What is MLOps?” also explains the relationship between MLOps and DevOps.

However, the transition requires knowledge and skills specific to machine learning workflows, including:

- The Machine Learning Lifecycle: Gaining familiarity with the different stages of an ML project, from data collection and preprocessing to model training, evaluation, deployment, and monitoring.

- ML-Specific Tools and Frameworks: Learning about popular ML frameworks (e.g., TensorFlow, PyTorch, scikit-learn), model serving technologies (e.g., TensorFlow Serving, TorchServe), and MLOps platforms.

- Data Engineering Concepts: Understanding data pipelines, feature engineering, and data versioning is crucial for effective MLOps.

- Model Monitoring Metrics: Learning to track and interpret ML-specific metrics, such as prediction accuracy, bias, and data drift, in addition to traditional application metrics like uptime and error rates.
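Data versioning and reproducibility can start with a simple habit: identify every training run by a fingerprint of its exact inputs. The following sketch hashes the dataset files, the hyperparameters, and a code version string into a short run ID. The function names and manifest layout are hypothetical; in practice the code version would typically be a Git commit SHA supplied by the CI system, and the placeholder `abc123` below stands in for it.

```python
"""Sketch: fingerprint a training run by its exact data, parameters, and code."""
import hashlib
import json
import tempfile
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def run_fingerprint(data_files: list[Path], params: dict, code_version: str) -> dict:
    """Build a manifest that identifies a training run by its inputs."""
    manifest = {
        # Keyed by file name, so names are assumed to be unique within a run.
        "data": {p.name: file_sha256(p) for p in sorted(data_files)},
        "params": params,
        "code_version": code_version,  # e.g. a Git commit SHA supplied by CI (assumption)
    }
    # sort_keys gives a canonical encoding: equal inputs always hash the same way.
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    manifest["run_id"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]
    return manifest


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "train.csv"
        data.write_text("x,y\n1,2\n")
        manifest = run_fingerprint([data], {"lr": 0.01, "epochs": 5}, "abc123")
        print(json.dumps(manifest, indent=2))
```

Because the manifest is serialized with sorted keys, the same data, parameters, and code always produce the same run ID, while a change to any of them produces a new one. Storing this manifest next to the trained model lets data scientists and engineers trace exactly what a deployed model was built from.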

Achieving a Smooth Transition from DevOps to MLOps

Companies can facilitate a smooth transition for their DevOps engineers into MLOps roles through a strategic approach that includes:

- Targeted Training and Development Programs: Providing access to online courses, workshops, and certifications focused on machine learning, data science fundamentals, and MLOps-specific tools and practices.

- Mentorship and Knowledge Sharing: Pairing interested DevOps engineers with experienced data scientists or MLOps professionals to facilitate knowledge transfer and provide guidance on real-world AI projects.

- Hands-on Experience with AI Projects: Gradually involving DevOps engineers in AI projects, starting with infrastructure setup and deployment tasks, and progressively increasing their responsibilities in model monitoring and automation.

- Dedicated Learning Time: Allocating dedicated time for DevOps engineers to study, experiment with new technologies, and contribute to internal MLOps initiatives.

- Fostering Collaboration: Encouraging close collaboration between DevOps, data science, and software development teams to break down silos and promote a shared understanding of the AI lifecycle.

How eDev Can Help You Hire the Best DevOps and MLOps Engineers

Building a successful AI team requires identifying and recruiting engineers with the right blend of skills and experience. At eDev, we understand the evolving demands of AI projects and have a proven track record of connecting businesses with top-tier DevOps and MLOps talent globally.

We can help your company:

- Define your specific DevOps and MLOps hiring needs based on your AI project roadmap and technical requirements.

- Access a global network of pre-vetted engineers with expertise in cloud infrastructure, automation, CI/CD, containerization, machine learning frameworks, and MLOps best practices.

- Streamline your recruitment process with our efficient sourcing, screening, and interview coordination.

- Find candidates with the right cultural fit for your team and a passion for working on cutting-edge AI technologies.

- Engage talent flexibly on a contract, contract-to-hire, or full-time basis, and scale your team as project needs evolve.

- Scale your engineering team quickly and cost-effectively by hiring from talent-rich tech regions that fit your budget and project timelines.

By partnering with eDev, you can overcome the challenges of finding skilled DevOps and MLOps engineers and build the high-performing remote teams necessary to transform your infrastructure into an engine for AI-driven intelligence. Contact us today to learn how we can help you navigate the exciting world of AI development.